In the last 2 posts about morality, I looked at moral statements being:

- based on empathy

- not provable from non-moral statements

- non-arbitrary because of their evolutionary history

Today I’ll look at what this means for actual morality talk. The first point is that morality seems to require both empathy and reason. As I argued in the first post, without empathy I don’t see how reason alone can do the job of building up moral statements. Without reason, on the other hand, all we have are our gut feelings, with nothing to build a robust system from. For instance, we know that many animals have considerable empathy, so the restrictions in their moral systems probably come from the limits of their reasoning, generalisation and extrapolation.

It is also interesting to note that empathy and reason are probably not completely distinct: both are strongly associated with the evolution of a general-purpose intelligent system. For a system to reason easily about others, a nice shortcut is for it to simulate their reactions and feelings (i.e. empathise with them). The converse holds too: to empathise with someone properly, you must be able to work out the consequences for them amid a complex range of possibilities (i.e. reason it out). So we have two elements, both evolved, both non-arbitrary and both not provable externally.

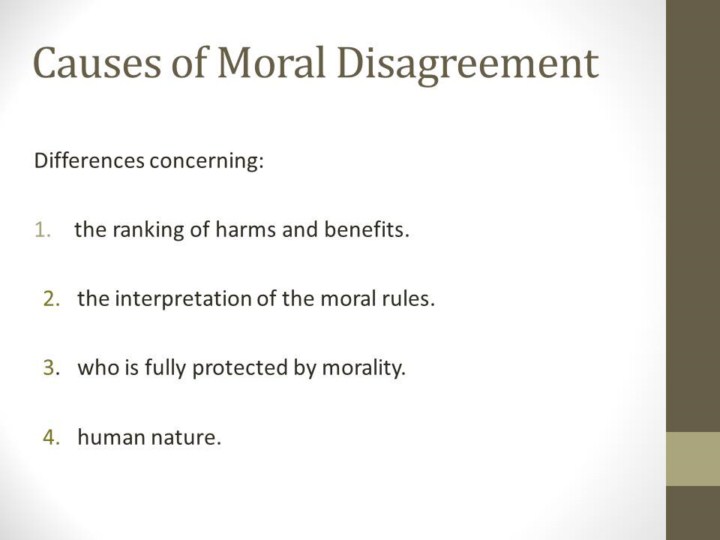

So how do we account for disagreements? This was one of the main things brought up in the comments. Let’s take a specific example: whether we should have the death penalty. Disagreements about the death penalty probably fall into 2 categories:

The first category is disagreement about facts, e.g. whether the death penalty deters crime, what the chances are of an innocent person being executed, etc. For this to be a real disagreement, both parties have to agree on the same basic moral statements (driven by empathy); if they don’t, it makes no sense to argue about the mechanics. Of course, these disagreements fall squarely into the reason hemisphere and can, in principle, be resolved objectively, since the values themselves are held in common.

The interesting thing is that there is an objectively right answer for a given set of values. For instance, if we have certain criteria for what makes a good society, then there is a fact of the matter about whether having the death penalty or not will make for a better society. Usually we can only estimate this (since we can’t run a controlled experiment keeping all other variables the same), but it IS important that an answer exists.

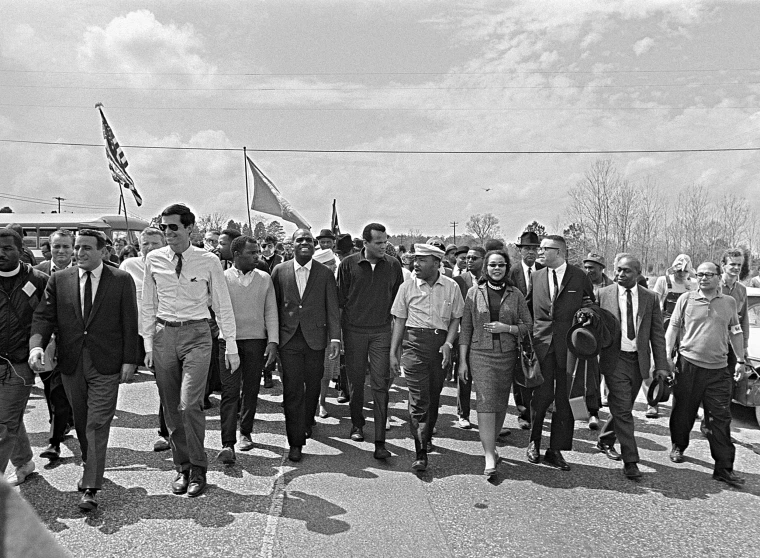

You might pooh-pooh this, saying that it misses the whole point of moral disagreements by reducing them to something trivial. But I think a surprising number of moral disagreements (probably a majority) fall squarely into the category of which path gives the best result for a set of values we all agree on. And this shouldn’t be surprising: we are biologically very similar, so our empathetic natures are very similar too, whereas the world is complex, and that is what makes the actual factual decisions difficult.

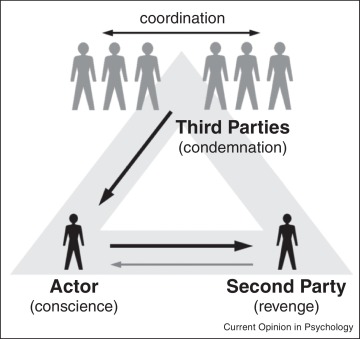

Of course, there is the other category: disagreement about values. For the death penalty, it might be the question of whether personal retribution is a legitimate (and important) consideration. This might be a truly intractable disagreement, where two people start from different biologically driven, atomic moral propositions. A lot of the time this stems from the interplay of harm and disgust that I’ve blogged about before. There actually seem to be 5 basic elements (harm, fairness, loyalty, respect and purity/disgust); see this TED talk for details.

These are the 5 types of moral statements that evolution has given our “gut” as a gift, and these are the ones we struggle to prioritise in the moral disagreements that don’t depend on facts. The relative importance of each is what’s not provable (the subjective part of morality), but even so, just like the more basic concept of blanket empathy, these are non-arbitrary. A normal person does not consistently prioritise disgust over harm: someone who, out of disgust, fails to save a person drowning in shit would be labelled a sociopath.

It is the fact that these 5 are wired with some variation from person to person that is the source of this type of disagreement. However, even here the variation stays within a healthy range: there should be no need to convince any normal person that harm should take priority over anything else*.

So I think there’s a counterintuitive conclusion: morality is not provable, but neither is it arbitrary. If we simply use our biological tools of reason and empathy (not that we have a choice anyway!), breaking arguments down into facts and values, decomposing the values according to the 5 intuitions and so on, we can sometimes even show that a moral conclusion follows, assuming we’re not arguing with a sociopath. This is as close to objective morality as we can come, but by jolly, I think we should take it.

It might seem that this account is missing some “key” ingredient that makes morality morality. More on this later, but this is probably our false intuition talking: the one that thinks morality is somehow magical, with an “essence of good” permeating good deeds. But this is the same intuition that thinks living beings have an “essence of life” and minds have a “soul”…

*Of course, evolution also gifted us inconsistencies and blind spots in these, the trolley problem being one example. This is where the fun has been for all these centuries. The best I can propose is a transhumanist solution: once we can modify ourselves, we can come up with a sane priority system for values and then implement it consistently.
